Qlustar Workload Management

Workload Management

Efficient and scalable workload management is crucial for an HPC cluster. Users expect their compute jobs to run as soon as possible and want a fair share of the available compute resources. They also want detailed information about their jobs’ status and convenient tools to manipulate them. Cluster administrators, on the other hand, are most interested in maximum utilization of their cluster hardware and need simple control over the status of compute nodes.

To achieve all of the above goals, Qlustar’s workload management is based on Slurm, the premier open-source workload manager. To complement Slurm’s superb feature set, QluMan provides a powerful GUI management front-end for it.

Slurm Integration

Optimal usage of a workload manager is guaranteed only if all its components are tightly integrated with the remaining functionality of a cluster. The Qlustar Slurm implementation takes care of this with the following features:

- Fully configured accounting to store and analyze historical data about jobs.

- Cgroup task affinity support enabled by default for process tracking, task management and job accounting statistics. It allows confining jobs/steps to their allocated cpuset, binding tasks to sockets, cores or threads, and restricting jobs/steps to specific memory or generic resources.

- Fully functional PMIx/PMI2 startup support for Qlustar OpenMPI as the most convenient method to start MPI programs within a job.

- Lua job plugin.

- HDF5 Job Profiling.

- PAM Support to optionally restrict user-logins on compute nodes (see below).
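From the user side, the cgroup confinement and PMIx startup features listed above are typically exercised through a batch script. The following Python sketch renders such a script. The helper name `render_job_script`, the program name and the resource numbers are hypothetical; `--mpi=pmix` and `--cpu-bind=cores` are standard `srun` options, but whether they need to be given explicitly depends on the site defaults.

```python
def render_job_script(program, nodes, tasks_per_node, cpus_per_task=1):
    """Return the text of a batch script for an MPI program (illustrative only)."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        "",
        # srun starts the MPI ranks via PMIx and binds each task to cores
        f"srun --mpi=pmix --cpu-bind=cores {program}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical program name and sizes, for illustration only
print(render_job_script("./my_mpi_app", nodes=2, tasks_per_node=16))
```

The generated text can be saved to a file and submitted with `sbatch`.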

Qlustar Slurm Configurator

The QluMan Slurm module makes the definition of a consistent Slurm configuration extremely simple:

- If selected, a working default configuration is generated and activated automatically during the Qlustar installation process, so cluster users can start submitting Slurm jobs right away.

- A text-based template provides a convenient and flexible interface for global Slurm properties/options.

- With the same interface, the cgroup configuration file is editable as well.

- Node groups allow the creation of node property sets that can be flexibly assigned to compute nodes.

- Partitions can be created and properties added to them. Afterwards, they can be flexibly assigned to compute nodes as well.

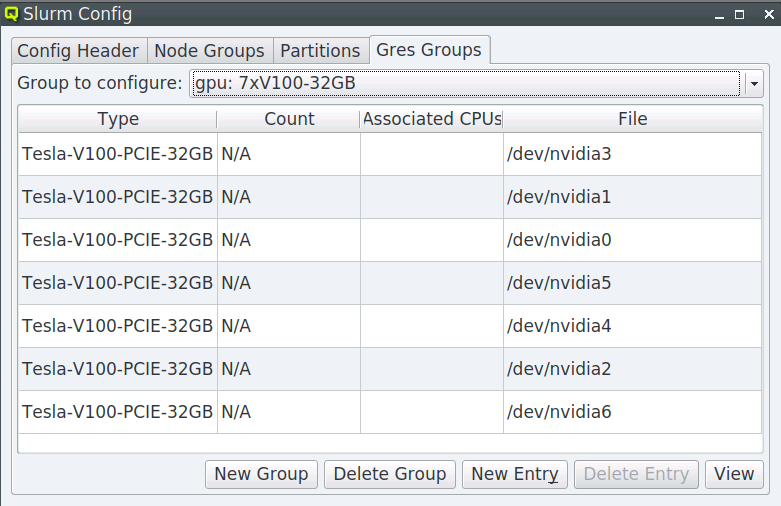

- GRES groups for GPUs can be conveniently defined and assigned to nodes using the GPU wizard.

- When done with the configuration, the resulting slurm.conf can be reviewed together with a diff to the currently active one. Pressing the Write button finally puts the configs on disk and restarts Slurm with the new configuration.
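The value of reviewing a diff before writing the configuration can be illustrated with a few lines of Python. This is a minimal sketch using the standard `difflib` module, not QluMan's actual implementation; the function name `config_diff` and the two sample configurations are made up for the example.

```python
import difflib


def config_diff(active, proposed):
    """Return a unified diff between two configuration texts."""
    diff = difflib.unified_diff(
        active.splitlines(keepends=True),
        proposed.splitlines(keepends=True),
        fromfile="slurm.conf (active)",
        tofile="slurm.conf (proposed)",
    )
    return "".join(diff)


# Sample contents, assumed for illustration only
active = "ClusterName=demo\nSelectType=select/linear\n"
proposed = "ClusterName=demo\nSelectType=select/cons_tres\n"
print(config_diff(active, proposed))
```

An empty result means the proposed configuration is identical to the active one, so nothing would change on disk.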

QluMan Slurm Operation

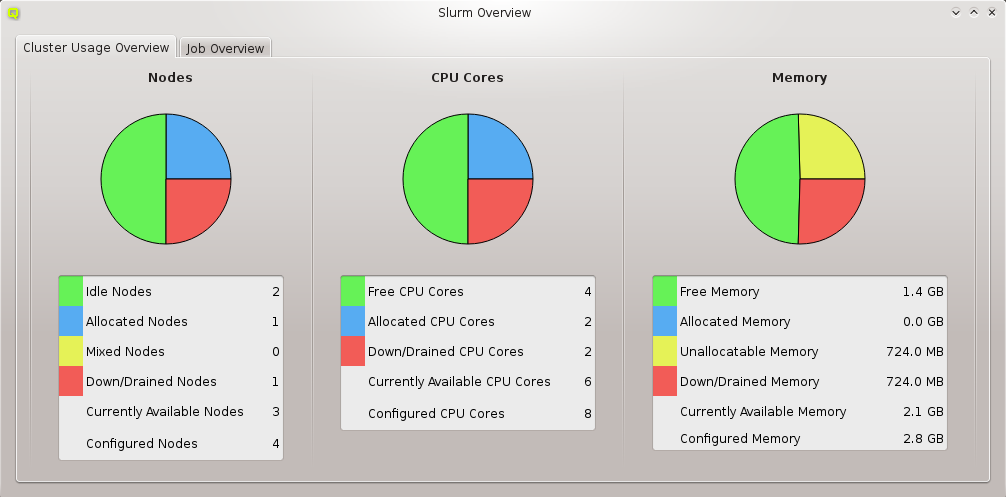

The QluMan GUI makes Slurm operation a fun task: A nice graphical overview provides the most important status information at a glance, covering both general cluster utilization and the top 10 users running jobs.

The job management widget allows you to customize the set of properties to be displayed for running and submitted jobs and save the corresponding layouts for later reuse. Further customization of your view of active jobs is possible via editable/reusable filters, which restrict the jobs shown to those that meet the filter criteria you specify. Changing the state of the jobs displayed in the job table is possible in many ways directly from a job’s context menu in the list. All these features may substantially enhance the productivity of both inexperienced admins and seasoned pros.

QluMan’s ‘Slurm Node State’ management has its own dialog, graphically showing the Slurm state (idle, drain, down, etc.) of each node by its LED color. It supports selecting nodes according to different criteria, either to change their Slurm state (e.g. to prepare for a cluster maintenance) or to restart the node’s slurmd.
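For comparison, the command-line route for draining nodes goes through `scontrol update`. The Python sketch below only builds the argument list and does not execute anything; the helper name `drain_command`, the node range and the reason text are hypothetical.

```python
import shlex


def drain_command(nodes, reason):
    """Build the scontrol arguments that put the given nodes into DRAIN state."""
    return [
        "scontrol", "update",
        f"NodeName={nodes}",
        "State=DRAIN",
        f"Reason={reason}",  # Slurm expects a reason when draining a node
    ]


# Hypothetical node range and reason, for illustration only
cmd = drain_command("node[01-04]", "scheduled maintenance")
print(shlex.join(cmd))
```

Passing the list to `subprocess.run` on a host with Slurm admin rights would apply the change without any shell quoting issues.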

Slurm reservations are easily created and modified using the QluMan reservations dialog. No need to spend time on studying the syntax to create them on the command line.
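To give an idea of the syntax the dialog saves you from, here is a hedged Python sketch that assembles a `scontrol create reservation` call. The helper name `reservation_command` and all values are invented for the example, and only a small subset of the available reservation options is shown.

```python
from datetime import datetime


def reservation_command(name, start, minutes, nodes, users):
    """Build the scontrol arguments for a simple node reservation."""
    return [
        "scontrol", "create", "reservation",
        f"ReservationName={name}",
        f"StartTime={start.strftime('%Y-%m-%dT%H:%M:%S')}",
        f"Duration={minutes}",  # a plain number is interpreted as minutes
        f"Nodes={nodes}",
        f"Users={','.join(users)}",
    ]


# Hypothetical reservation, for illustration only
cmd = reservation_command(
    "maint", datetime(2030, 1, 15, 8, 0), 120, "node[01-04]", ["alice", "bob"]
)
print(" ".join(cmd))
```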

Slurm accounting operations are supported by the account manager to create and modify accounts and the user manager to add and remove users to/from accounts.

Detailed info about the cluster and its historical job usage can be retrieved using the ‘Cluster usage’ dialog. ‘Fair share’ info is shown in its own window and priorities of queued jobs may also be displayed in a dedicated widget.

Qlustar Slurm Special Features

Energy Savings from Power Management

Given compute nodes with the corresponding hardware support (e.g. IPMI), Qlustar enables you to take advantage of Slurm’s power-saving mode. When activated, it shuts down idle nodes as long as no jobs are waiting to be executed. Once jobs are submitted, enough nodes are started up automatically to run them. This will lower your electricity bill and is a nice contribution to a world with less carbon dioxide or radioactive waste.
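The behavior described above can be modeled in a few lines of Python. This is a deliberately simplified sketch of the decision, not Slurm's actual algorithm; the function name `nodes_to_suspend`, the threshold parameter and the sample data are assumptions made for illustration.

```python
def nodes_to_suspend(idle_seconds, pending_jobs, suspend_time):
    """Return the nodes that may be powered down.

    idle_seconds: mapping of node name to seconds the node has been idle
    pending_jobs: number of jobs currently waiting in the queue
    suspend_time: idle threshold in seconds before a node is powered down
    """
    if pending_jobs > 0:
        # Jobs are waiting, so keep every node available
        return []
    return sorted(
        node for node, idle in idle_seconds.items() if idle >= suspend_time
    )


# Hypothetical cluster state, for illustration only
idle = {"node01": 1800, "node02": 30, "node03": 900}
print(nodes_to_suspend(idle, pending_jobs=0, suspend_time=600))
```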

Slurm-aware Application Submit Scripts

Qlustar comes with a collection of submit scripts for a number of applications (mostly commercial third-party apps like ANSYS, LS-DYNA, StarCD, PamCrash, etc.). These scripts provide a consistent interface to cluster users and make it very easy to take full advantage of available compute resources, even for inexperienced users. Note that this feature is available only on request. Contact us for more information.

Application License Management

Cluster users who need to run commercial applications are often negatively impacted by the license management interface of these applications. Since the number of available licenses is always limited, a common scenario is that a job waits in the queue for a long time and then terminates immediately after starting because of insufficient licenses, which is pretty annoying for users. To prevent such situations, Qlustar supplies a Slurm license add-on that keeps track of all licenses of an application (including ones used outside of the cluster) and makes sure that a job only starts to run if the needed licenses are available. Note that this feature is available only on request. Contact us for more information.
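The core idea of license-aware scheduling is a simple availability check before a job is allowed to start. The Python sketch below illustrates that check only; it is not the Qlustar add-on, and the function name `can_start` as well as the license names and counts are hypothetical.

```python
def can_start(required, total, in_use):
    """Return True if every license a job needs is currently free.

    required: licenses the job needs, e.g. {"ansys": 2}
    total:    licenses owned per application
    in_use:   licenses currently checked out, inside or outside the cluster
    """
    return all(
        total.get(name, 0) - in_use.get(name, 0) >= count
        for name, count in required.items()
    )


# Hypothetical license pool, for illustration only
total = {"ansys": 4, "lsdyna": 8}
in_use = {"ansys": 3, "lsdyna": 2}
print(can_start({"ansys": 2}, total, in_use))   # one license short
print(can_start({"lsdyna": 4}, total, in_use))  # enough licenses free
```

A job that fails this check stays in the queue instead of starting and failing right away.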

Node Access Restrictions via PAM

Many cluster sites have a policy that users should not have ssh access to cluster nodes by default. However, debugging a misbehaving running job often requires a login on the nodes it runs on. As a straightforward solution to this dilemma, QluMan provides an option to enable the Slurm PAM module. With this option enabled, users can ssh only to those nodes where one of their jobs is running.

Slurm High-Availability

Especially on large clusters or ones with mission-critical workloads, admins want to avoid downtime as much as possible. As part of the Qlustar HA stack, a Slurm pacemaker resource type is available that can take care of monitoring the Slurm control and DB daemons. In a correctly configured redundant head-node pair, the HA stack will make sure that the Slurm daemons are either restarted or automatically migrated to the fully functional node in case of hardware or software problems on the original one. Contact us if you need assistance with designing or setting up such an HA configuration.

Slurm Python Interface

Qlustar comes with PySlurm, the Python interface for Slurm. This module provides convenient access to the Slurm C API via Python, allowing sysadmins or users to write automation scripts in this popular programming language. Qlustar developers have already contributed a number of patches to the project.
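A typical automation script might summarize the job queue by state. The sketch below assumes the legacy PySlurm call `pyslurm.job().get()`, which returns a dictionary keyed by job ID; newer PySlurm releases offer a different class-based API, and the `job_state` key should be checked against the installed version. The helper names `load_jobs` and `count_jobs_by_state` and the sample data are hypothetical.

```python
from collections import Counter


def load_jobs():
    """Fetch all jobs; needs PySlurm and a reachable Slurm controller."""
    import pyslurm  # imported lazily so the summary helper works without Slurm

    return pyslurm.job().get()


def count_jobs_by_state(jobs):
    """Count jobs per state from a mapping of job ID to job properties."""
    return Counter(info.get("job_state", "UNKNOWN") for info in jobs.values())


# Sample data shaped like the assumed PySlurm result, for illustration only
sample = {
    101: {"job_state": "RUNNING"},
    102: {"job_state": "PENDING"},
    103: {"job_state": "RUNNING"},
}
print(count_jobs_by_state(sample))
```

On a cluster node, `count_jobs_by_state(load_jobs())` would produce the same kind of summary for the live queue.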

SLURM is a registered trademark of SchedMD LLC.